An enquiry into the essence of energy, information and time.

“Everything flows and nothing stays” — Heraclitus

Things change. As I type this, I watch words emerge before my eyes. Pixels evolve into recognizable symbols. Which is to say, time passes. It’s later now than when I first sat down at this table a few minutes ago. My coffee, cooler. My laptop battery, less charged. Though I can’t observe it, I’m sure there’s been some minute change in my cells as well. And the cells of those I love.

Entropy underlies all of this. A concept that has permeated my mind over the past 5 years, slowly diffusing into my core beliefs. Entropy manifests itself in many fields yet remains profound in each of them. And somehow, despite these varied contexts, it remains powerfully coherent. The following pages are a brief exploration of those contexts — the ones that seem most important to me at least. Bitcoin, as an agent of entropy, will invariably be discussed. But this is not an essay about Bitcoin. It is an essay about energy, the engine of life, and time itself. Let us begin.

There is energy. It’s not a thing, but a quality. A capacity to do work. Three laws seem to govern its behavior.

The first is conservation. Energy is neither created nor destroyed. It can be converted into other forms, but the aggregate amount is conserved.

The second describes energy’s natural flow. In simple terms, the energy in a system will naturally disperse to become more evenly distributed, diffuse, and less ordered over time. Entropy, a term derived from the Greek word “tropē,” translated as a “turning” or “transformation,” is a measure of this disorder.

Examples remain the best way to illustrate the concept. A hot body touching a cold one will give off heat until the two are the same temperature (equilibrium). Ice melts.

Or instead, imagine a box with gas particles bundled tightly in a corner. Over time the particles will spread out, becoming more diffuse, randomly bouncing around the container until it appears as though they are evenly distributed.

Importantly, this flow is probabilistic. We cannot predict the exact position of these particles after a given unit of time, but we can determine the probability of different distributions. And if the gas is diffuse, it is very improbable that its constituent particles will regroup into that initial tight bundle in the corner.
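To put a number on "very improbable," here is a minimal sketch under a loud simplifying assumption: N independent, non-interacting particles, each equally likely to be in either half of the box at any instant (an idealization, not a model of a real gas). Even the modest requirement that all of them sit in the left half, never mind a tight corner, has probability (1/2)^N.

```python
import math

def log10_prob_all_in_left_half(n_particles: float) -> float:
    """Return log10 of the probability that all n particles are in the left half.

    Assumes each particle is independently equally likely to be in either half,
    so the probability is (1/2) ** n. We return the base-10 logarithm because
    the raw value underflows to 0.0 for realistic particle counts.
    """
    return -n_particles * math.log10(2)

for n in (1, 10, 100, 6.022e23):  # 6.022e23 is roughly one mole of particles
    print(f"N = {n:g}: probability = 10^{log10_prob_all_in_left_half(n):.4g}")
```

For a mole of gas the exponent is on the order of minus 10^23, which is why "can" and "does" part ways so completely.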

Entropy therefore tells us how the energy of a system is distributed among its parts. Heuristically speaking, low entropy states have a high variance in energy content and composition. Dense pockets and empty space. A cold body surrounded by heat. Or the opposite. Both alike in that they are thermodynamically improbable. Both far from equilibrium. We call this order because there is distinction. On the other hand, high entropy states are composed of matter and energy that are diffuse, spread out, randomly distributed, perhaps to the point of seeming homogeneity. Words like disorder and chaos are often used to describe them, lacking in distinguishable complexity and structure. In this way, thermal equilibrium is itself the state that has the highest possible entropy for a given system; the heat has nowhere else to flow.
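Why equilibrium is the highest-entropy state can be sketched with Boltzmann's formula S = k_B ln W, applied to the box of gas described above, idealized as two halves. A macrostate is "n of N particles on the left," and W counts the microscopic arrangements that produce it. N = 100 is an arbitrary illustrative choice.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant, J/K (exact SI value)

def microstates(n_total: int, n_left: int) -> int:
    """Number of ways to choose which n_left of n_total particles are on the left."""
    return math.comb(n_total, n_left)

def boltzmann_entropy(n_total: int, n_left: int) -> float:
    """S = k_B * ln(W) for the macrostate with n_left particles on the left."""
    return K_B * math.log(microstates(n_total, n_left))

N = 100  # illustrative particle count
for n_left in (100, 90, 75, 50):
    w = microstates(N, n_left)
    print(f"{n_left:3d} left: W = {w:.3e}, S = {boltzmann_entropy(N, n_left):.3e} J/K")

# The macrostate with the most microstates (highest entropy) is the even split.
most_probable = max(range(N + 1), key=lambda n: microstates(N, n))
print("most probable macrostate:", most_probable, "particles on the left")
```

The "all on one side" macrostate has exactly one arrangement and zero entropy; the even split has about 10^29 arrangements, so random motion almost always lands near it.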

So then entropy is the natural tendency for things to flow from more to less ordered states. Order… naturally gives way to more diffuse, random, and chaotic disorder.

There is also a third law that tells us of the theoretical minimum temperature (absolute zero, a state at which entropy is at its minimum), but it is less relevant to this essay. With these laws in mind, we can proceed.

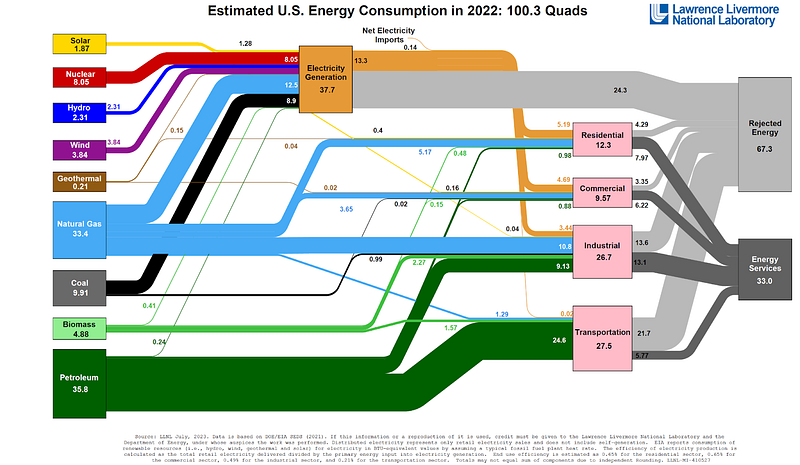

Every energy conversion comes with a loss of useful energy, which is to say, an increase in entropy. Waste heat is an inevitable byproduct of doing useful work.

A boulder rolls down a mountain; the potential energy of its elevated position is converted not only into motion but also into heat: the friction as it brushes past other rocks. Gasoline vapor combusts, a piston moves, the engine turns; at most 30% of the fuel’s energy is used to rotate the wheels forward, and the rest is lost as heat and exhaust, radiating out into the universe. The most efficient power plants (combined cycle natural gas) only harness 60% of the fuel’s energy; even after recapturing the waste heat from the turbine for a second cycle of electricity generation, 40% of the methane’s energy goes unutilized. With each energy conversion, more and more waste heat is produced; entropy inevitably continues to increase.
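Because losses compound, the useful fraction left after several conversions is the product of each step's efficiency. A minimal sketch: the 30% engine and 60% combined-cycle figures come from the paragraph above, while the 90% figure for delivering electricity to its end use is a hypothetical placeholder, not a measured value.

```python
from math import prod

def useful_fraction(efficiencies: list[float]) -> float:
    """Fraction of the starting energy still doing useful work after each conversion.

    Each efficiency is the share of its input that a step passes on as useful
    output; the remainder leaves as waste heat, raising entropy.
    """
    return prod(efficiencies)

# 60% combined-cycle plant (from the text), then a hypothetical 90% for
# transmission and end use (an assumption for illustration only).
chain = [0.60, 0.90]
useful = useful_fraction(chain)
print(f"useful: {useful:.0%}, shed as waste heat: {1 - useful:.0%}")

car = useful_fraction([0.30])  # gasoline engine upper bound from the text
print(f"car: at most {car:.0%} reaches the wheels, at least {1 - car:.0%} is lost")
```

Adding any further conversion step can only lower the product, which is the arithmetic face of "there is no conversion without waste."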

So energy is preserved, but its order decreases. That is, unless we add energy to the system.

It’s a hot summer day. To cool down your living room, fuel in a distant power plant is burned to energize your air conditioning. Place a hand on either side of the AC unit; again we find entropy in action, albeit at a smaller scale. When we build the next great cathedral, joules of some kind will go to waste as we lift the stone to craft the spire. It is only from the calories Sisyphus consumed that he is able to push a boulder up the mountain in the first place.

Energy is never actually “produced.” It is refined. This refinement is a local reduction of entropy, while even more entropy (waste heat) is shed elsewhere. Energy technologies make this possible. As the above examples illustrate, energy can be more or less easy to use. To this end, we have developed a global apparatus of infrastructure to channel energy into more servile forms. This already happens today, everywhere around us.

The gas in your car is the end product of a long supply chain. The hydrocarbon is extracted, refined, and transported to where you want it, when you want it; much heat is shed in the process. Your “renewables” are no different: minerals are extracted from around the world and shipped via diesel freighter to coal-powered factories, where they’re fashioned into panels and blades to catch diffuse sunshine or the intermittent breeze, then shipped again via hydrocarbons to a site near you, where they convert a fraction of that “renewable” energy to electricity, at least part of the time, until they need to be replaced. Again, much heat is shed in the process, and much carbon as well (to say nothing of forced labor). This too is a dirty little secret of the green movement: the West is not eliminating carbon emissions, just outsourcing them. The entropy must go somewhere.

The telos of these technologies is to channel energy to our benefit. Since man first harnessed fire, we have continued to tinker, finding better and better tools to do so. These tools and the knowledge of how to apply them are the key differences between Alexander the Great walking past a curious black liquid oozing from the earth and the multi-trillion-dollar hydrocarbon industry today. In that time and because of these energy technologies, we have grown from being able to support some 200 million human lives to 8 billion.

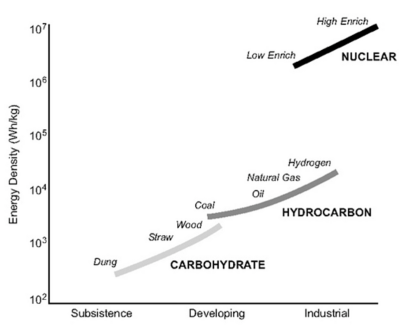

The lens of entropy can also be applied to these energy resources themselves (both technologies and fuels). Some are inherently lower entropy than others. Lower entropy in two senses: density and control.

Density: if entropy tells us how the energy of a system is distributed among its parts, then dense energy resources are inherently lower entropy. Density can be defined in multiple ways, but the two most relevant are the energy content per unit of mass (joules per kilogram, J/kg) and the rate of energy flow per unit of land area (watts per square meter, W/m²). A kilogram of wood contains 16 megajoules of energy. That same mass of natural gas, 55 megajoules. A kilogram of uranium fuel as burned in today’s reactors, however, yields around 3,900,000 megajoules, or 3.9 terajoules; fully fissioning a kilogram of pure Uranium-235 would release roughly 80 terajoules. In terms of land use, the last nuclear power plant in California, Diablo Canyon, utilizes 12 acres to produce an average of 2.0 GW (6% of the state’s electricity generation). The state’s largest wind farm, Alta Wind Energy Center, is spread across 32 thousand acres and produces less than 1/5 the kilowatt-hours.
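The density comparisons reduce to simple ratios. A minimal sketch using the paragraph's own figures (16 MJ/kg for wood, 55 MJ/kg for natural gas, 2.0 GW on 12 acres for Diablo Canyon, and a wind farm producing under one fifth of that on 32,000 acres, taken here as an upper bound of 0.4 GW), plus a textbook uranium estimate that assumes roughly 200 MeV released per U-235 fission. Fully fissioning pure U-235 gives about 80 TJ/kg; reactor fuel is mostly U-238 and only partly burned before it is discharged, so it yields far less per kilogram, which is why figures of a few terajoules per kilogram are also quoted.

```python
# Energy per unit mass (MJ/kg), figures from the text.
WOOD_MJ_PER_KG = 16
NATURAL_GAS_MJ_PER_KG = 55

# Rough physics estimate for pure U-235, assuming ~200 MeV per fission.
AVOGADRO = 6.02214076e23        # atoms per mole
U235_MOLAR_MASS_G = 235.04      # grams per mole
MEV_TO_J = 1.602176634e-13      # joules per MeV
atoms_per_kg = 1000 / U235_MOLAR_MASS_G * AVOGADRO
u235_mj_per_kg = atoms_per_kg * 200 * MEV_TO_J / 1e6

print(f"gas vs wood: {NATURAL_GAS_MJ_PER_KG / WOOD_MJ_PER_KG:.1f}x")
print(f"U-235 (fully fissioned): {u235_mj_per_kg:.2e} MJ/kg "
      f"= {u235_mj_per_kg / 1e6:.0f} TJ/kg")

# Areal power density (W/m^2): average power divided by land area.
M2_PER_ACRE = 4046.8564224

def watts_per_m2(avg_power_w: float, acres: float) -> float:
    """Average power output per square meter of land."""
    return avg_power_w / (acres * M2_PER_ACRE)

nuclear = watts_per_m2(2.0e9, 12)      # Diablo Canyon, per the text
wind = watts_per_m2(0.4e9, 32_000)     # "less than 1/5" of 2.0 GW, upper bound
print(f"nuclear: {nuclear:,.0f} W/m^2, wind: {wind:.1f} W/m^2, "
      f"ratio ~{nuclear / wind:,.0f}x")
```

On these numbers the nuclear site delivers on the order of ten thousand times more power per square meter, even before accounting for the wind farm's "less than."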

Control: the extent to which we can dispatch the energy resource also relates to entropy. Nuclear power plants can be energized on demand, but we cannot call on the wind to blow. Alta Wind Energy Center boasts an average capacity factor of less than 25% (meaning that despite its 1.6 GW nameplate capacity, the wind farm’s output averages less than 0.4 GW over the course of the year). Diablo Canyon, on the other hand, maintains an average capacity factor of ~90%. It’s no surprise grids often maintain substantial back-up generation just in case these intermittent resources fail to deliver. But more on randomness and entropy later.
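Capacity factor is average output divided by nameplate capacity, so average output is nameplate capacity times capacity factor. A minimal sketch with the figures above; Diablo Canyon's nameplate capacity of roughly 2.2 GW is an outside figure added for illustration, not one stated in the text.

```python
HOURS_PER_YEAR = 8760

def average_output_gw(nameplate_gw: float, capacity_factor: float) -> float:
    """Average power delivered over a year, given nameplate capacity and capacity factor."""
    return nameplate_gw * capacity_factor

wind_avg = average_output_gw(1.6, 0.25)     # Alta Wind: 1.6 GW at under 25%
nuclear_avg = average_output_gw(2.2, 0.90)  # Diablo Canyon: ~2.2 GW (assumed) at ~90%

for name, avg in (("wind", wind_avg), ("nuclear", nuclear_avg)):
    twh = avg * HOURS_PER_YEAR / 1000  # GW x hours -> GWh, then GWh -> TWh
    print(f"{name}: {avg:.2f} GW average, about {twh:.1f} TWh per year")
```

The result reproduces the text's ratio: roughly 0.4 GW of average wind output against roughly 2.0 GW of nuclear, about one fifth.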

These dimensions make the historical trend toward dense and reliable energy resources all the more obvious and the recent resurgence of high entropy infrastructure all the more puzzling.

Among other things, Bitcoin itself is an energy technology. Proof-of-work offers a way to monetize electricity (that most fungible form of energy) anywhere at any time. The engine of Bitcoin seeks to maximize its own adoption and security by offering everyone in the world the prospect of a financial return. Such competitive commodity production has caused a global arms race for the lowest-cost energy, revolutionizing the way humans think about waste and energy systems. An ASIC is a heat sink for the energy sector in the most literal sense. It is dense and controllable, soaking up highly ordered energy that might otherwise be wasted, shedding hot air in the process.

But whether from Bitcoin or other technologies, this waste heat isn’t for naught. It is the cost of preserving order. It keeps us alive. In a thermodynamic sense, life is a process that consumes energy to maintain and create order. This is as true within our bodies as it is without, whether the final fuel be ATP (adenosine triphosphate) or electricity. Perhaps, as Gigi previously observed, Bitcoin’s ceaseless use of energy to grow and preserve itself is proof that Bitcoin is a living organism.

The global technological habitat we’ve created is no different. Buildings, bridges, roads, and hospitals all decay. So too do power lines and power plants. It is only by converting energy to repair and maintain this infrastructure that we can preserve that which keeps us alive. Energy is the cost of homeostasis. And there is no conversion without waste.

So to create order is to reduce entropy locally, thereby shedding an even greater amount of entropy elsewhere. It follows that increasing levels of order (complexity) require increasing levels of energy use. For this reason, physicists like Eric Chaisson measure complexity using energy rate density, or the flow of energy per unit of matter (typically expressed in ergs per second per gram, where one erg is 100 nanojoules).
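Energy rate density is simply power divided by mass. A minimal sketch, assuming an adult who takes in about 2,000 kcal per day and weighs about 70 kg (illustrative round numbers, not figures from the text), with the result converted to erg per second per gram, the unit Chaisson typically reports (one erg is 10^-7 J, or 100 nanojoules).

```python
SECONDS_PER_DAY = 86_400
J_PER_KCAL = 4184
ERG_PER_J = 1e7

def energy_rate_density(power_w: float, mass_kg: float) -> float:
    """Energy flow per unit mass, in erg per second per gram."""
    return power_w * ERG_PER_J / (mass_kg * 1000)

# Hypothetical adult: ~2,000 kcal/day intake, ~70 kg body mass.
power_w = 2000 * J_PER_KCAL / SECONDS_PER_DAY  # about 97 W
phi = energy_rate_density(power_w, 70)
print(f"{power_w:.0f} W -> {phi:.2e} erg/s/g ({phi * 100:.2e} nJ/s/g)")
```

Under these assumptions a human body runs at a bit over 10^4 erg/s/g; Chaisson's comparisons across stars, plants, animals, and societies are made in this same unit.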

As I’ve written elsewhere, “life is order.” And Chaisson agrees that life seems to increase its rate of energy use as it evolves, as it becomes more complex. Plants as they evolved from gymnosperms, to angiosperms, to tropical grasses. Societies as they mature from hunter-gatherers, to agriculturalists, to industrialists, and now technologists. With each successive step in evolution, a higher rate of energy usage per unit mass. He even notes a similar trend among stars.

In the context of entropy, this is intuitive. To create and preserve increasing degrees of order, more and more energy must flow into (and out of) the system.

But to what end? If there is a natural tendency towards disorder, towards increasing entropy, why does order develop at all? Years ago, Dhruv Bansal blessed me with another life-changing idea (he’s done this before). Perhaps there is a fourth law of thermodynamics: that the universe solves for the path of steepest entropy ascent. In his words, perhaps the universe maximizes the rate of entropy production by creating ordered, hierarchical structures within an ever-growing space of possibilities. Order is a means to this end. To reduce entropy locally is to increase entropy globally. Perhaps life builds upon itself, with the unstated objective of accelerating the natural journey toward disorder.

If true, this fourth law has some interesting implications.

First, there is a definitive direction of the universe (even if we do not fully understand the path).

Second, the most dense energy technologies will eventually be selected for, as they are most adept at increasing entropy.

Third, order will accelerate. Life will continue to climb on top of itself, harnessing more and more energy, shedding more and more entropy, more and more heat. To create order. To create itself. The project is Sisyphean, but we are that project.

And if our civilization returns to dark ages anew, it will only be a temporary detour. The unused fuels will just be combusted at a later date when our successors have redeveloped the tools and collective will to do so.

Such a law makes a technology like Bitcoin seem all the more inevitable. The game theory of harnessing moar energy to create money is such an effective way to increase entropy that its emergence feels all but guaranteed. Perhaps once again, Dhruv saw further in predicting the inevitability of proof-of-work-based moneys for sufficiently intelligent life forms.

There is yet another dimension of entropy to consider: information. Here, entropy refers to randomness or noise. Still disorder, just in bits not joules.
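In information theory this disorder is usually quantified as Shannon entropy, H = -Σ p log2 p, measured in bits. A minimal sketch: a fair coin carries one bit per flip, while a heavily biased coin carries far less, because its outcomes are more predictable.

```python
import math

def shannon_entropy(probabilities: list[float]) -> float:
    """Shannon entropy in bits: H = -sum(p * log2(p)), skipping zero-probability outcomes."""
    return -sum(p * math.log2(p) for p in probabilities if p > 0)

print(shannon_entropy([0.5, 0.5]))    # fair coin: 1.0 bit
print(shannon_entropy([0.99, 0.01]))  # biased coin: ~0.08 bits
print(shannon_entropy([1 / 6] * 6))   # fair die: ~2.585 bits
```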

We rely on this entropy when generating private keys. We roll dice and look to other forms of natural randomness (“adding entropy”) to ensure the secret that unlocks our bitcoin is sufficiently unpredictable. Security through unpredictability. This same randomness is also supposed to keep our personal information private and our bank accounts secure from malicious third parties; though, of course, we cannot verify this for ourselves.
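Each roll of a fair six-sided die contributes log2(6), about 2.585 bits, of entropy. A minimal sketch of how many rolls it takes to match a 256-bit secret, the size of a Bitcoin private key. It only counts bits and does not turn rolls into a usable key: a real key must also fall within the secp256k1 group order and be encoded by wallet software. Python's standard `secrets` module appears as the software analogue of rolling dice.

```python
import math
import secrets

def rolls_needed(target_bits: int, sides: int = 6) -> int:
    """Minimum number of fair-die rolls whose combined entropy reaches target_bits."""
    return math.ceil(target_bits / math.log2(sides))

print(rolls_needed(256))      # 100 rolls of a six-sided die
print(rolls_needed(256, 2))   # 256 coin flips

# Software randomness from the operating system's CSPRNG: 256 bits as an integer.
# Illustration only; not a wallet-ready key (no range check or encoding).
sample = secrets.randbits(256)
print(f"{sample:064x}")
```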

And so if informational entropy is randomness, its opposite is order. To reduce entropy is to parse out the signal from the noise.

Again, we must look to bitcoin mining. ASICs dig through mathematical space to find valid blocks, rolling the dice of the cryptographic hash function until they produce the order of a hash below the difficulty target. Much hot air is shed from heat sinks on hash boards (as can be felt in mining farm “hot aisles” around the world). But it is through this process that Bitcoin verifiably links the digital and physical worlds. The nonce is the residue of this process; proof that energy was in fact “spent.” And once this block is added to the chain, the nonce lives on through the energy consumption of full nodes. One can only preserve the order of bits by consuming joules. Storage too comes at a cost.
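A toy sketch of the lottery described above, assuming a made-up header and a very easy target: double SHA-256 (the hash Bitcoin uses) is applied to header bytes plus a nonce until the result, read as a little-endian integer as Bitcoin does, falls below the target. The header contents and `DIFFICULTY_BITS` are hypothetical; real Bitcoin headers are 80 bytes with specific fields, and real targets are astronomically harder to hit.

```python
import hashlib

DIFFICULTY_BITS = 16                      # hypothetical, tiny difficulty
TARGET = 2 ** (256 - DIFFICULTY_BITS)     # hash must be below this value

def double_sha256(data: bytes) -> bytes:
    return hashlib.sha256(hashlib.sha256(data).digest()).digest()

def mine(header: bytes, target: int = TARGET) -> tuple[int, bytes]:
    """Try nonces 0, 1, 2, ... until double-SHA-256(header + nonce) < target."""
    nonce = 0
    while True:
        digest = double_sha256(header + nonce.to_bytes(4, "little"))
        if int.from_bytes(digest, "little") < target:
            return nonce, digest
        nonce += 1

def verify(header: bytes, nonce: int, target: int = TARGET) -> bool:
    """Checking the work takes one hash, no matter how many tries mining took."""
    digest = double_sha256(header + nonce.to_bytes(4, "little"))
    return int.from_bytes(digest, "little") < target

header = b"toy block header: previous hash, transactions, time"  # made-up data
nonce, digest = mine(header)
print("nonce:", nonce)
print("hash: ", digest[::-1].hex())  # byte-reversed for display, as block explorers do
print("valid:", verify(header, nonce))
```

The asymmetry is the point: mining burns tens of thousands of hashes even at this toy difficulty, while anyone can verify the resulting nonce with a single hash. With a 16-bit difficulty, the displayed hash always begins with at least four zero hex digits.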

This idea extends to intelligence, which can be defined as the ability to reduce informational entropy. Gigi first enlightened me with this idea over red lagers in a Latvian basement; my appreciation for its power has only grown since. Considering entropy in the thermodynamic sense, the idea is intuitive. Much like plants distill sunlight and matter into more dense carbohydrates, intelligence distills vast amounts of information into dense, useful theories. Intelligence is finding signal in the noise. In Gigi’s words, “it is the lossless compression of truth.”
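Lossless compression makes this picture concrete: a compressor can only shrink data that contains patterns, and on average it cannot squeeze data below its entropy. A minimal sketch with Python's standard `zlib`: highly ordered, repetitive bytes shrink dramatically, while random bytes from `os.urandom` do not shrink at all.

```python
import os
import zlib

SIZE = 10_000

ordered = (b"signal " * SIZE)[:SIZE]   # low entropy: one short pattern repeated
noise = os.urandom(SIZE)               # high entropy: random bytes

for name, data in (("ordered", ordered), ("noise", noise)):
    compressed = zlib.compress(data, 9)
    print(f"{name}: {len(data)} -> {len(compressed)} bytes")
```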

This is perhaps what Plato was after when he introduced his theory of the Forms. That there exists a pure, unadulterated essence of what a thing is. The expression of an idea with least possible entropy. The theoretical lower bound. Even if we, as humans, can never access it.

Forms aside, we still strive to reduce informational entropy. To create intelligent theories that are dense, useful, and true.

“Yet without obscurity

or needless explanation

the true prophet signifies.”

— Heraclitus

We spend our whole lives processing stimuli so that we can become more intelligent, more adept at bringing about desired outcomes. We project ourselves out into the future to ensure a desirable state of the world, reflecting on the past to inform such projections. Much sweat is shed in the process; much heat. Our brain consumes 20% of our energy intake despite only amounting to 2% of our body mass. And as this nutritional energy is processed and converted, global entropy increases.

The power of such intelligence is obvious, so of course people try to imitate it, abstracting concepts incorrectly. We must be wary of the human urge to oversimplify. One need only peruse an airport bookstore to see this in the real world: the vast wasteland of pop non-fiction, countless pages expressing singular, pedestrian ideas that lack robust explanatory power. Such elongated blog posts could hardly be accused of being dense; or perhaps they could.

Once you see entropy, it’s hard not to see it everywhere.

I’m sitting outside on a Saturday morning, thinking about how to make this essay less shit; which is to say, higher signal. My cup of coffee is again cooler now than when I poured myself a refill. The cigarette in my hand dissolves, slowly incinerating to ash. The smoke dissipates in undulating currents until the breeze blows it away altogether, irreversibly mixing with the atmosphere. The smoke, ash, and butt are far less ordered than they were when I opened the pack. I consider the energy that grew the tobacco via photosynthesis, the fuel and machines that powered its harvesting, the distillation of paper and plant into a fully-formed dart. And if something doesn’t kill me first, one day the carcinogens will mutate my genetic code, wreaking the havoc of entropy on my biological information, until my body consumes itself.

I take one last drag. The entropy has to go somewhere.

But there are still more aspects to entropy, more secrets to uncover.

“All forms of wealth are alike in that they are thermodynamically improbable.”

— John Constable

The Encyclopedia Britannica defines “economics” as the “social science that seeks to analyze and describe the production, distribution, and consumption of wealth.” As tends to happen with rabbit holes, this definition leads us to yet another: that of “wealth”. Here we look to John Constable (emphasis added throughout):

“When we talk about… valued objects we are talking about ‘wealth’, in the archaic Anglo-Saxon meaning of something that augments human well-being, wealth. So, ‘Wealth’ is a state of the world that increases wellbeing, in other words that satisfies or is likely to satisfy some human requirement (or ‘demand’ to use the standard term in economics). These states of the world vary in character to an extraordinary degree, from a glass of cool water in the desert, to a mug of hot tea on a cold night in Northumberland, from a roof over your head, to the floor beneath your feet; from the engine that makes your car move, to the brakes that stop it; from the sandwich on the shelf in the supermarket when you want it; to the sewerage that carries the digested remains safely away when you have finished with it.”

This broad definition includes intangible forms of wealth such as language, intellectual traditions, and information itself. Elsewhere Constable notes that one commonality underlies all such examples: “they are all, without exception, improbable.” They are all “physical states far from thermodynamic equilibrium, and the world was brought, sometimes over long periods of time, into these convenient configurations by energy conversion, the use of which reduced entropy in one corner of the universe, ours, and increased it by an even larger margin somewhere else.”

That is to say, all wealth creation is entropy reduction, in the most literal sense. Consider the chair you are sitting on. The non-human world rarely solves for lower back support. The chair was made using energy and raw materials. If wooden: a tree somewhere in the world was chopped down, transported many miles, and fashioned into a shape that so pleases the human tush. If another material: hydrocarbons were likely used either to power the factory that made the chair or even to produce the material of the chair in the first place. Each link in this supply chain was thermodynamically improbable.

So wealth too is a function of energy. We rearrange matter to a more improbable configuration so that we might satisfy our desires. This, without exception, requires energy. Therefore, all existing wealth embodies past energy conversions.

Such realizations shed new light on other foundational economic concepts.

If all economic growth is entropy reduction, then the capital stock of the economy (“K” in economic notation) represents not only previous (local) reductions in entropy but also our capacity for yet more. This is particularly true in the case of energy infrastructure. We can only reduce entropy to the extent we have power plants and supply chains to fuel them. By shutting down dense base load generation, we are limiting our ability to create wealth. Even to preserve our own existing order.

Even money becomes just another reflection of entropy.

If all wealth creation is entropy reduction, then “financial wealth” under a sound money standard can be thought of as a measure of the entropy you have reduced for others, which is the value you have provided. AJ Scalia was first to see this connection.

Money itself is also a means for reducing informational entropy. It helps us communicate useful economic information via the pricing mechanism. Under sound money, this communication is emergent, organic, and high signal so that economic calculation can be more accurate.

But the key function of money is to transfer value into the future. In AJ’s words, it is that good which minimizes uncertainty for the possessor. And, to quote Roy Sebag, we want money that is “superior in its resistance to entropy through time.” This entropy can take many forms: physical decay, human conflict, and inflation among them.

From the entropy of fiat, Bitcoin instantiates order.

But whether money is sound or not, we are still forced to grapple with the future.

We work today, so that tomorrow we don’t have to. We save any surplus for future consumption. And we only invest our money if there is a sufficiently attractive rate of return to forgo instant gratification or the optionality of savings. Even if, as in our current system, “sufficiently attractive” might mean a return high enough to avoid losing purchasing power to inflation. Such is the treadmill of fiat.
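The fiat treadmill can be made concrete with the real rate of return, which adjusts a nominal return for inflation: (1 + nominal) / (1 + inflation) - 1. A minimal sketch; the 2% and 5% rates are hypothetical examples, not figures from the text.

```python
def real_return(nominal: float, inflation: float) -> float:
    """Inflation-adjusted (real) rate of return."""
    return (1 + nominal) / (1 + inflation) - 1

def purchasing_power(amount: float, nominal: float, inflation: float, years: int) -> float:
    """Value of `amount` after `years`, expressed in today's purchasing power."""
    return amount * (1 + real_return(nominal, inflation)) ** years

# Hypothetical: savings earning 2% a year while prices rise 5% a year.
print(f"real return: {real_return(0.02, 0.05):.2%}")
print(f"100 today is worth {purchasing_power(100, 0.02, 0.05, 10):.2f} in 10 years")
```

A nominal return merely equal to inflation leaves purchasing power unchanged; anything less is a slow loss, which is what forces savers to seek returns just to stand still.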

This relative valuation of the present to the future is referred to as time preference. The only reason we have the capital necessary to reduce entropy in the human sphere is because, in the past, individuals’ time preferences were sufficiently low to invest for tomorrow.

But what does it mean? Past, present, and future. Once again we find entropy in the answer.

All of the above concepts are mediated through time.

Energy is the capacity to do work, an act that can only be done over time. Of the five primary mathematical expressions of the joule, all contain a variable expressing time. Power is energy over time (a watt is one joule per second). The second law of thermodynamics itself describes entropy’s behavior over time; or more precisely, the fact that in an isolated system it does not naturally decrease (in mathematical notation: dS/dt ≥ 0, where S = entropy and t = time).

It is only from experience (time) that we are able to parse out the signal from the noise, which is to say intelligence also is a function of time (a point Kant would contest, despite his punctuality).

And “the production, distribution, and consumption of wealth,” the focus of the field of economics, is also time-dependent. The first three nouns refer to processes over time, while the last (“wealth”) refers to states of the world at different points in time. Perhaps this is obvious, as economics is a human study, and we ourselves are temporal beings. We are inherently situated in time.

Consequently, humans have long wrestled with the concept of time. Does anything exist outside of the present? Or is time just another dimension to be mastered and traversed, if only we had the tools? Many careers have been devoted to these and other profound questions. But I will once again frustrate the philosophic reader by sidestepping the rigor these questions deserve and — like the smooth brain I am — go back to the things themselves.

Time. What is it? That thing that passes. But we are so steeped in the concept that we often mistake its measure for the thing itself. Time is not to be found in seconds; seconds are just a tool we invented to understand it.

“Time is not a reality [hupostasis], but a concept [noêma] or a measure [metron]…” — Antiphon the Sophist — Gigi

Things change. And we measure this change through regularly repeating mechanisms, which is to say, changes we understand. It seems likely this is how humanity first measured time: night and day, winter and summer. The same is true for all clocks. The sun moves, casting a shadow in its wake. Gears go round, turning the hands of a watch. Electrons jump, moving digits. These marginal processes define what we call time.

“The sun, timekeeper

of the day and season,

oversees all things.”

— Heraclitus

And by the seventeenth century AD, we were so familiar with the concept of time (using predictable change to quantify other changes) that Newton abstracted it to better predict the movement of bodies, earthly and celestial. In doing so, he invented time with a capital “T.”

“Absolute, true, and mathematical time, of itself, and from its own nature flows equably without regard to anything external” — Isaac Newton

But it now appears this abstraction was not as universal as Newton had thought.

Relativity tells us that time moves faster or slower depending on the speed of the clock (special relativity) and the massive bodies surrounding it (general relativity). Two twins are born. One travels near the speed of light to distant lands; the other stays home tending his crops. When the prodigal son returns, he is far younger than his brother; his watch has ticked far fewer times. We even see this phenomenon on planes traveling far slower than the speed of light.
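In special relativity, a moving clock runs slow by the Lorentz factor γ = 1 / sqrt(1 - v²/c²). A minimal sketch of the twin example, assuming a hypothetical trip at 99% of light speed lasting 10 years by the stay-at-home twin's clock, and ignoring acceleration and gravity. A passenger jet at an assumed 250 m/s is included to show how small the speed effect is at everyday velocities; measured airborne clock offsets also include a gravitational contribution this sketch leaves out.

```python
import math

C = 299_792_458.0  # speed of light, m/s

def lorentz_factor(speed_m_s: float) -> float:
    """gamma = 1 / sqrt(1 - v^2 / c^2)."""
    return 1 / math.sqrt(1 - (speed_m_s / C) ** 2)

def traveler_elapsed(home_elapsed: float, speed_m_s: float) -> float:
    """Time on the moving clock while home_elapsed passes on the stationary one."""
    return home_elapsed / lorentz_factor(speed_m_s)

# Hypothetical round trip at 0.99c lasting 10 years for the twin at home.
print(f"traveler ages {traveler_elapsed(10, 0.99 * C):.2f} years")

# Jet at an assumed 250 m/s for 10 hours: how far its clock falls behind.
hours = 10
lag_ns = (hours * 3600) * (1 - 1 / lorentz_factor(250)) * 1e9
print(f"jet clock falls behind by about {lag_ns:.1f} ns (speed effect only)")
```

The traveler ages about 1.4 years to his brother's 10; the jet's clock loses only around a dozen nanoseconds, small but measurable with atomic clocks.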

Not only does this mean that time can move at different speeds, it also renders the idea of a singular “now” throughout the universe obsolete. What would “now” mean to someone millions of light-years away? There is no hope of synchronizing due to the inherent latency in any communication that would verify the experience shared. While this doesn’t much affect our lives today, it does reveal the true nature of what we mean when we ask “what time is it?”

Clocks were invented for human coordination. Clocktower bells once rang throughout towns to synchronize human activities. Now, the “correct” time is pushed to our phones and laptops from centralized servers. But how can we trust the clock of another?

Again we look to Gigi. In Bitcoin is Time, he explains Bitcoin’s role as a decentralized clock. The chain of blocks imposes chronological order in a trustless way. It establishes a verifiable now with the chain tip. The difficulty adjustment self-regulates to ensure this now doesn’t move forward too quickly. And anyone in the world can run a node and see it for themselves. It is the most trustless now we have.
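The difficulty adjustment can be sketched in a few lines. This is a simplified sketch of Bitcoin's rule, assuming its published parameters: a retarget every 2,016 blocks, aiming for 10 minutes per block, with each adjustment clamped to a factor of four. It omits details of the real consensus code, such as the compact target encoding and the maximum-target cap.

```python
RETARGET_INTERVAL = 2016          # blocks between adjustments
TARGET_SPACING_S = 10 * 60        # intended seconds per block
EXPECTED_TIMESPAN_S = RETARGET_INTERVAL * TARGET_SPACING_S  # two weeks

def next_target(old_target: int, actual_timespan_s: int) -> int:
    """Scale the target by how long the last 2016 blocks actually took.

    Blocks came too fast -> smaller target (harder); too slow -> larger (easier).
    The measured timespan is clamped so one step changes difficulty at most 4x.
    """
    clamped = max(EXPECTED_TIMESPAN_S // 4, min(actual_timespan_s, EXPECTED_TIMESPAN_S * 4))
    return old_target * clamped // EXPECTED_TIMESPAN_S

old = 2 ** 224  # arbitrary illustrative target
print(next_target(old, EXPECTED_TIMESPAN_S) == old)             # on schedule: unchanged
print(next_target(old, EXPECTED_TIMESPAN_S // 2) == old // 2)   # twice as fast: half the target
print(next_target(old, EXPECTED_TIMESPAN_S * 10) == old * 4)    # far too slow: capped at 4x
```

Each line prints `True`. The feedback loop is what keeps the chain's "now" advancing at roughly one block per ten minutes regardless of how much hash power joins or leaves.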

Things change. And time is the tool man invented to measure it. Time, capital T, is an abstraction, but one that surely has proven useful.

So there is no universal Time. But surely time still mediates our experience, it still flows. Or at least seems to. We call this flow the “arrow of time.” But where does it come from?

Most of the fundamental laws of physics are symmetrical with respect to time. The equations discovered to explain gravity, motion, the conservation of mass and energy, and electricity would all work just as well if the arrow of time were flipped; if the world moved backwards.

What then gives time its direction? The second law of thermodynamics may once again hint at an answer.

The second law, the law of increasing entropy, is the most prominent fundamental law of physics that is not symmetric with respect to time. As time passes, entropy increases. As we’ve discussed previously, entropy is probabilistic. It’s not that the gas particles can’t naturally regroup into a tight bundle in the corner of a box; it’s just that they don’t. While this might not be causation, this correlation might be the best clue we have.

“Energy and time are conjugates. They are tied to each other.” — Carlo Rovelli

So entropy, the flow that powers life, is the tendency for order to degrade over time; and the arrow of time might itself just be the arrow of increasing entropy. The astute reader will notice the circularity. Perhaps it is an indication of incomplete knowledge. Perhaps an indication of great mysteries beyond our comprehension. The answer might be found tomorrow, that sweetest of things that never comes.

“Whence all creation had its origin,

the creator, whether he fashioned it or whether he did not,

the creator, who surveys it all from highest heaven,

he knows — or maybe even he does not know.”

— The Nasadiya Sukta, The Rigveda

And as it turns out, energy suffers from a similar problem. There is no method for measuring energy directly. Again, it is a quality and not a substance.

Despite our ceaseless use of it, we can only attempt to understand energy through indirect means. In electricity, we use volts and amps to derive it. In nutrition, the calorie (literally how much heat is created by burning the food). But we still can’t see energy, the thing itself.
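Both measurements derive energy from other quantities rather than observing it directly. A minimal sketch: electrical energy for a steady current into a resistive load is voltage times current times time (joules = volts × amps × seconds), and one food Calorie is a kilocalorie, 4,184 joules by the thermochemical definition. The heater and meal values are hypothetical.

```python
J_PER_KCAL = 4184  # thermochemical kilocalorie (one food "Calorie")

def electrical_energy_j(volts: float, amps: float, seconds: float) -> float:
    """Energy delivered by a steady current into a resistive load: E = V * I * t."""
    return volts * amps * seconds

def food_energy_j(kcal: float) -> float:
    """Convert food Calories (kilocalories) to joules."""
    return kcal * J_PER_KCAL

heater = electrical_energy_j(120, 10, 3600)  # hypothetical 1.2 kW heater for one hour
meal = food_energy_j(600)                    # hypothetical 600 kcal meal
print(f"heater: {heater / 1e6:.2f} MJ ({heater / 3.6e6:.1f} kWh)")
print(f"meal:   {meal / 1e6:.2f} MJ")
```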

My conversations with Rete Browning often result in me staring at equations I haven’t considered since college (and worse still, doing algebra). Chatting with him about this essay proved no exception. As Rete revealed, if you drill into the maths, you see that four of the five primary mathematical expressions of the joule boil down to the movement of some mass over some distance during some time period (with the exception requiring the inclusion of electric charge). Our measurements boil down to what we can see. All else is an abstraction. Energy is no exception.
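Assuming the five expressions in question are the standard SI equivalences usually listed for the joule, they can be written out as follows. The first four reduce directly to mass, distance, and time; the last involves electric charge, and since a coulomb is an ampere-second, time appears there as well.

$$
\begin{aligned}
1\,\mathrm{J} &= 1\,\mathrm{N\cdot m} = 1\,\mathrm{kg\cdot m^{2}\cdot s^{-2}} \\
              &= 1\,\mathrm{W\cdot s} \\
              &= 1\,\mathrm{Pa\cdot m^{3}} \\
              &= 1\,\mathrm{C\cdot V}, \qquad 1\,\mathrm{C} = 1\,\mathrm{A\cdot s}
\end{aligned}
$$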

Perhaps this makes sense. Energy is potential. When we think about energy, about power, we really are thinking about the potential for useful work. How we might rearrange matter to our benefit. And if “energy is the only universal currency,” then the universe as a whole is shaped by this potentiality, by what could be. Potentiality takes shape and is reshaped, degrading with each transformation but — in rare instances — becoming manifest as order.

Life has mastered harnessing this potentiality to increasing degrees over time. We as humans have so excelled at this that we can sit on the couch while our energy infrastructure refines the lifeblood of the universe for us, supporting billions of lives, creating tremendously improbable states of matter, and shedding many zettawatt-hours of waste heat as a result.

Things change. We have spent all of human history trying to understand this change. And, if such things exist, that which doesn’t change. Our measurements and “laws” are downstream of this endeavor. They are imperfect, but they are the most perfect tools we have. The strongest signal we can draw by induction. To paraphrase the 13th-century Buddhist teacher, Dōgen Zenji, “before one studies… mountains are mountains and waters are waters; after a first glimpse into the truth… mountains are no longer mountains and waters are no longer waters; after enlightenment, mountains are once again mountains and waters once again waters.”

So where does this leave us?

Time passes, which is to say, things change. As they change, the amount of energy is conserved but its order decreases; the energy becomes more diffuse, more random. Amidst this backdrop, we enjoy these brief instances of order. We harness energy so that we might sustain and increase them. Both in number and complexity. In doing so, perhaps we are fulfilling our pre-ordained mission as agents of entropy, shedding greater and greater amounts of disorder into the universe. But these precious instances of order remain brief nonetheless.

A son is born. A father is told he will soon die. This is the way of the world.

“I am Time, the mighty cause of world destruction;

Who has come forth to annihilate the worlds.”

— The Bhagavad Gita

And as time passes, we live and create. We accelerate the inevitable trend of entropy, only to find that entropy—this trend toward disorder—might actually lie at the heart of time itself.

This, to me at least, seems to be the nature of things. We are riding the past energy conversions of mankind. We are left to decide whether we want this to be a local maximum before returning to a new dark age, or if we have the will to flourish, to create beautiful, ordered complexity, an endeavor that necessitates the acceleration of entropy elsewhere. Perhaps the decision has already been made for us. Only time will tell.

Originally published in The Bitcoin Times