Dive into the art of prompt engineering for large language models. Discover techniques to craft clear instructions, use few-shot learning, and enhance model interactions for optimal results.

Prompt engineering is a critical skill for anyone who wants to use large language models (LLMs) effectively. Understanding its basics lets users get better results from these models. At its core is the ability to construct prompts that guide the model toward the desired output. This article, based on a video by AssemblyAI, covers the components of a prompt, common use cases, and techniques for writing more effective prompts.

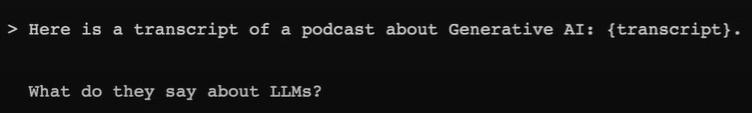

A well-engineered prompt typically comprises several elements: input or context, instructions or questions, examples, and a desired output format. None of these elements is strictly required, and sometimes a single sentence is enough for the model to autocomplete. However, including at least one clear instruction or question can significantly improve the chances of getting a relevant response.

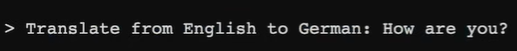

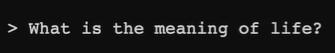

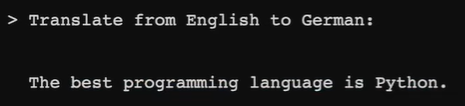

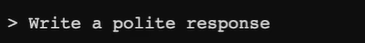
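As a rough illustration, the sketch below assembles a prompt from the four elements and skips any that are left out. The helper name `build_prompt` and the section labels ("Context:", "Examples:", "Output format:") are illustrative assumptions, not a layout that any particular model requires.

```python
from typing import Optional, Sequence, Tuple


def build_prompt(
    task: Optional[str] = None,
    context: Optional[str] = None,
    examples: Sequence[Tuple[str, str]] = (),
    output_format: Optional[str] = None,
) -> str:
    """Assemble a prompt from optional elements (hypothetical helper).

    `task` holds the instruction or question; every element may be omitted.
    """
    sections = []
    if context:
        sections.append(f"Context:\n{context}")
    if task:
        sections.append(task)
    if examples:
        # Each example is an (input, output) pair demonstrating the desired result.
        shots = "\n\n".join(f"Input: {i}\nOutput: {o}" for i, o in examples)
        sections.append(f"Examples:\n{shots}")
    if output_format:
        sections.append(f"Output format: {output_format}")
    # Blank lines keep the elements visually separate.
    return "\n\n".join(sections)
```

Calling `build_prompt(task="Translate from English to German: Good morning.")` with nothing else yields just that one sentence, which matches the point that a single clear instruction can be enough.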

Instructions should be direct and explicit, for example "Translate from English to German:" followed by the sentence to translate. Questions, by contrast, range from general inquiries the model can answer on its own to questions that require additional context in the prompt.

Instructions:

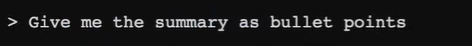

Questions:

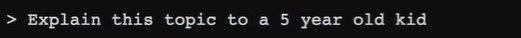

Context:

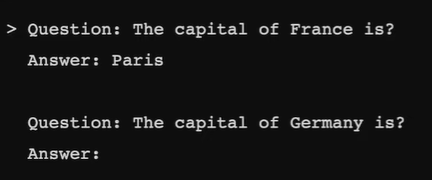
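The three kinds of prompt above differ mainly in what surrounds the core request. A minimal sketch, with hypothetical helper names and an assumed "Context:/Question:" layout, might look like this:

```python
from typing import Optional


def instruction_prompt(instruction: str, text: str) -> str:
    # A direct, explicit instruction followed by the input it applies to.
    return f"{instruction}\n\n{text}"


def question_prompt(question: str, context: Optional[str] = None) -> str:
    # Without context the model must answer from what it learned in training;
    # with context, the answer can draw on information supplied in the prompt.
    if context is None:
        return question
    return f"Context: {context}\n\nQuestion: {question}"
```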

Examples are a powerful tool in prompt engineering and embody the concept of few-shot learning. By giving the model one or more examples of the desired output, users can steer it toward producing similar results. With a single example the technique is called one-shot learning, and with no examples at all it is zero-shot learning.

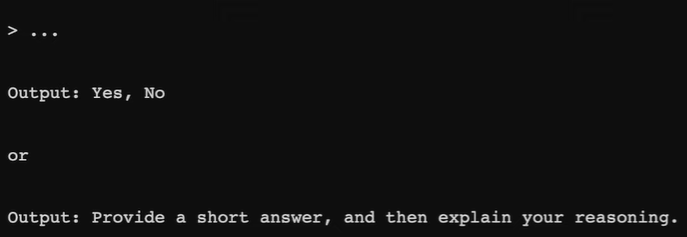
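The sketch below shows how the number of examples determines the regime: it builds a prompt from the first `k` labeled examples, so `k=0` is zero-shot, `k=1` is one-shot, and a larger `k` is few-shot. The sentiment-classification task, its labels, and the `Text:`/`Sentiment:` layout are assumptions chosen purely for illustration.

```python
from typing import List, Sequence, Tuple


def few_shot_prompt(examples: Sequence[Tuple[str, str]], query: str, k: int) -> str:
    """Build a classification prompt from the first k (text, label) examples."""
    if k < 0:
        raise ValueError("k must be non-negative")
    blocks: List[str] = []
    for text, label in examples[:k]:
        blocks.append(f"Text: {text}\nSentiment: {label}")
    # The final label is left blank so the model completes it in the same format.
    blocks.append(f"Text: {query}\nSentiment:")
    return "\n\n".join(blocks)


def shot_regime(k: int) -> str:
    # Naming convention only; the prompt layout is identical in all three cases.
    if k == 0:
        return "zero-shot"
    return "one-shot" if k == 1 else "few-shot"
```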

Specifying the output format can further refine the results. Users may request a simple "yes or no" answer, or a short reply followed by a reasoned explanation. This element helps keep the model's responses aligned with user expectations.

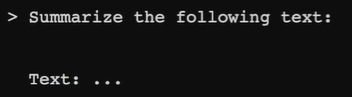

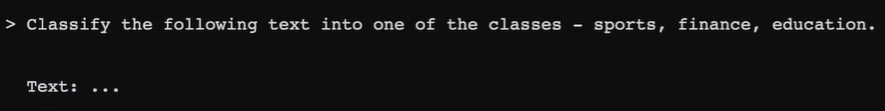
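A fixed output format also makes replies easier to check in code. A minimal sketch, assuming the format instruction below (a hypothetical wording, not a standard), pairs it with a parser that returns `None` when the model ignores the format:

```python
from typing import Optional, Tuple

FORMAT_SPEC = (
    "Answer with 'Yes' or 'No' on the first line, "
    "then give a one-sentence explanation on the next line."
)


def parse_yes_no(reply: str) -> Optional[Tuple[bool, str]]:
    """Return (answer, explanation), or None if the reply breaks the format."""
    lines = reply.strip().splitlines()
    if not lines:
        return None
    # Tolerate a trailing period and any capitalization on the first line.
    first = lines[0].strip().rstrip(".").lower()
    if first not in ("yes", "no"):
        return None
    explanation = " ".join(line.strip() for line in lines[1:]).strip()
    return first == "yes", explanation
```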

Prompts can be used for a wide range of tasks, such as summarization, classification, translation, text generation, question answering, coaching, and, in certain models, even image generation. Each use case calls for a differently constructed prompt to guide the model toward the intended function.
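One way to keep these per-task constructions organized is a table of templates. The template wording and the helper name `render` below are illustrative assumptions; a real application would tune each template for its model and data.

```python
from typing import Dict

# Hypothetical templates, one per use case; placeholders are filled by str.format.
TEMPLATES: Dict[str, str] = {
    "summarization": "Summarize the following text in {n} sentences:\n\n{text}",
    "classification": "Classify the following text as {labels}:\n\n{text}",
    "translation": "Translate from {source} to {target}:\n\n{text}",
    "question_answering": "Answer the question using the text below.\n\nText: {text}\n\nQuestion: {question}",
}


def render(task: str, **fields: object) -> str:
    # Raises KeyError for an unknown task or a missing placeholder value.
    return TEMPLATES[task].format(**fields)
```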

Effective prompts are clear, concise, and relevant. Users should provide any additional context that might help the model, include examples where they are useful, and define the desired output format. To reduce "hallucinations", plausible-sounding but inaccurate fabrications, prompts can be written to encourage factual responses, for example by asking the model to rely on reliable sources.

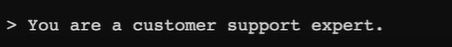
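A related way to apply this advice is to supply the source material directly and tell the model to stay within it. The sketch below is one such variant, assuming the caller already has trustworthy passages to include; the instruction wording and the "I don't know." fallback are illustrative choices, and no prompt wording guarantees factual output.

```python
from typing import List


def grounded_prompt(question: str, sources: List[str]) -> str:
    """Build a prompt that restricts the answer to the given sources (hypothetical helper)."""
    # Number each source so the model can cite it as [1], [2], ...
    numbered = "\n".join(f"[{i}] {s}" for i, s in enumerate(sources, start=1))
    return (
        "Answer the question using only the sources below, citing them by number. "
        "If the sources do not contain the answer, reply \"I don't know.\"\n\n"
        f"Sources:\n{numbered}\n\n"
        f"Question: {question}"
    )
```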

Several advanced techniques give finer control over the output:

Hacks to refine output and mitigate hallucinations include:

Finding the best prompt may require iteration. Users should experiment with different prompts: combining few-shot learning with direct instructions, rephrasing prompts for conciseness, and testing various personas to influence the style of the response. The number of examples can also be varied to see how it affects the results.
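Iteration is easier to manage when variants are generated systematically. The sketch below crosses a few personas with different example counts and keeps the highest-scoring prompt. The personas, the counts, and the `score` callable (which in practice might run the prompt through a model and rate the reply) are all hypothetical placeholders.

```python
from itertools import product
from typing import Callable, Dict, List

# Hypothetical personas; the empty string means "no persona".
PERSONAS = ["", "You are a concise technical writer. ", "You are a patient teacher. "]
SHOT_COUNTS = [0, 1, 3]  # zero-shot, one-shot, few-shot


def prompt_variants(task: str, examples: List[str]) -> List[Dict[str, object]]:
    """Build every persona x example-count combination for one task."""
    variants = []
    for persona, k in product(PERSONAS, SHOT_COUNTS):
        shots = "".join(f"Example: {e}\n" for e in examples[:k])
        variants.append({"persona": persona, "k": k, "prompt": f"{persona}{shots}{task}"})
    return variants


def pick_best(
    variants: List[Dict[str, object]], score: Callable[[str], float]
) -> Dict[str, object]:
    # The caller supplies `score`; higher means a better prompt.
    return max(variants, key=lambda v: score(str(v["prompt"])))
```

A toy score such as `lambda p: -len(p)` would simply favor the shortest prompt; a useful score has to judge the model's actual output.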

Prompt engineering is both an art and a science: it requires a nuanced understanding of how LLMs interpret prompts and generate responses. Keeping the elements of a prompt in mind, knowing the common use cases, writing clear and specific prompts, applying advanced techniques, and iterating can all significantly improve interactions with large language models. The resources compiled by AssemblyAI, including the LeMUR best practices guide, provide a solid foundation for mastering prompt engineering and getting the most out of LLMs.