
As should be evident by now, training a large language model is no small task. Researchers and companies are still testing new techniques and building an understanding of what yields better results, but it’s early days. As I’ve said, it is very much both an art and a science. Perhaps more so in our case, since the fundamental paradigm the Satoshi models are meant to reflect is opposite to, or out of phase with, just about every other kind of model out there right now, whether open or closed source. How we dealt with these challenges is discussed in more detail in this post.

In this article, I’d like to clarify the differences between training, tuning and augmentation. Augmentation in particular is often mistakenly described as a way to “train your own model”.

There is a lot of misinformation out there regarding “training” models.

If you trust the average ‘AI bro’ on Twitter, you’ll believe that you can just upload a few PDFs, a couple of books, a podcast or a company’s financial report to “train your own AI”.

This is completely false.

For a number of reasons, two of them being:

We will explain what this all means, and the different options one has with respect to training a model, fine-tuning it, or augmenting an existing model with a semantic database that it references.

Large Language Models are AI models that can understand and generate human-like text. Unlike more focused AI applications, LLM training doesn't target a specific domain or task; instead, it aims to develop a comprehensive understanding of language, often across multiple languages and contexts. This training involves feeding vast amounts of quality* text data into a model, enabling it to learn patterns, nuances, and structures of language.

Notice the use of the word “quality” here. Quality is a subjective term. In the context of LLM training data, quality refers to how representative the training dataset is of what you want the model to output later.

The process of LLM training is complex and multifaceted. It's not just about accumulating data but also about preparing it in a way that's conducive to learning the intricacies of language.

Imagine it as teaching a child language by exposing them to an extensive library of books, conversations, and writings, but at a scale and speed that’s only possible in the digital world.

LLM training is about creating a foundation of language understanding that's both broad and deep. The trained model should be capable not just of repeating what it has seen but also of generating new, coherent, and contextually appropriate content.
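To make “learning patterns of language” a little more concrete, here’s a deliberately tiny sketch of the core pre-training objective: predict the next token from what came before. It’s a toy under heavy assumptions, not how LLMs are built. Real models are transformer networks trained with gradient descent on trillions of tokens, while this one simply counts which word follows which (a bigram model). The function names are hypothetical.

```python
from collections import Counter, defaultdict

def train_bigram(corpus: list[str]) -> dict[str, Counter]:
    """Count which token follows which: a toy stand-in for next-token pre-training."""
    counts: dict[str, Counter] = defaultdict(Counter)
    for text in corpus:
        tokens = text.lower().split()
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    return counts

def next_token_probs(model: dict[str, Counter], token: str) -> dict[str, float]:
    """Turn raw follow-counts into a probability distribution over the next token."""
    followers = model.get(token.lower(), Counter())
    total = sum(followers.values())
    return {tok: n / total for tok, n in followers.items()} if total else {}

def generate(model: dict[str, Counter], start: str, max_tokens: int = 5) -> str:
    """Greedy generation: repeatedly append the most likely next token."""
    out = [start.lower()]
    for _ in range(max_tokens):
        probs = next_token_probs(model, out[-1])
        if not probs:
            break  # the model has never seen anything follow this token
        out.append(max(probs, key=probs.get))
    return " ".join(out)

corpus = [
    "the cat sat on the mat",
    "the dog sat on the rug",
    "the cat ate the fish",
]
model = train_bigram(corpus)
print(next_token_probs(model, "the"))
print(generate(model, "the", max_tokens=5))
```

Even this toy produces “the cat sat on the cat”, a sequence that never appears in its corpus. Scale, architecture and data quality are what turn that kind of pattern-matching into coherent text.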

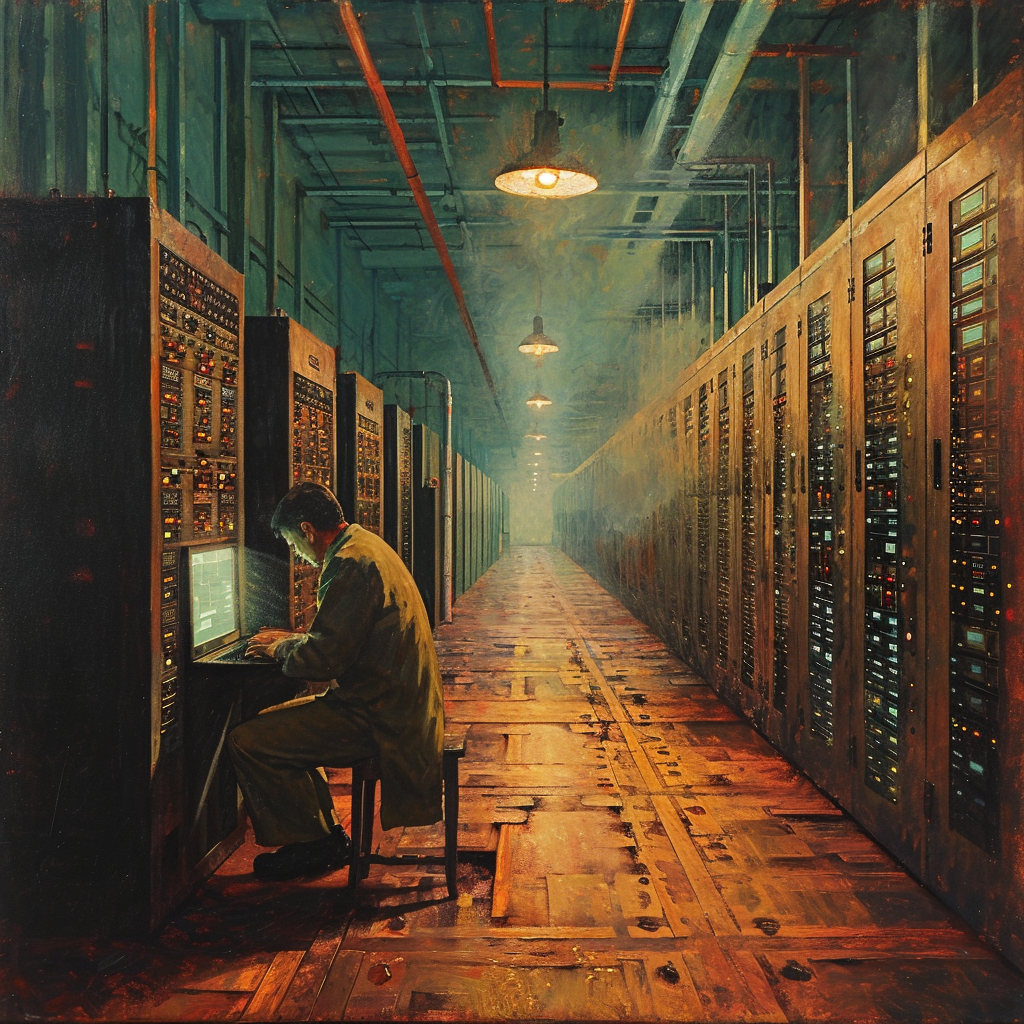

The computational requirements for training an LLM are not trivial. Training needs high-end GPUs or TPUs, which are often available only to well-funded organizations or research institutions. And of course, all of this requires energy: the process is not only data-intensive but also compute-intensive. At the micro level, this is not a concern. Companies like ours just plug into what’s available, use “free credits” from cloud providers where possible, and seek compute through whatever means we can, whether centralized or decentralized (like GPUtopia).

Of course, at the macro level, this is a concern, and it will be interesting to see what the Bitcoin industry can teach the AI industry when it comes to the efficient scaling of compute.

All this is to say that when people tell you that “you can train a model on your own data” - they have absolutely no idea what they’re talking about.
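A back-of-the-envelope calculation shows why. A widely used rule of thumb from the scaling-law literature puts training compute at roughly 6 FLOPs per parameter per training token. The sketch below applies it to a hypothetical 7B-parameter model trained on 2 trillion tokens; the per-GPU throughput and the size of “your own data” are illustrative assumptions, not measurements.

```python
def training_flops(n_params: float, n_tokens: float) -> float:
    """Rule-of-thumb training cost: ~6 FLOPs per parameter per token
    (roughly 2 for the forward pass and 4 for the backward pass)."""
    return 6 * n_params * n_tokens

def gpu_hours(flops: float, sustained_flops_per_gpu: float) -> float:
    """Convert total FLOPs into GPU-hours at an assumed sustained throughput."""
    return flops / sustained_flops_per_gpu / 3600

# Illustrative assumptions, not measurements:
N_PARAMS = 7e9            # a 7B-parameter model
N_TOKENS = 2e12           # 2 trillion training tokens
GPU_THROUGHPUT = 150e12   # assumed sustained FLOP/s per GPU
YOUR_DOCS_TOKENS = 1e6    # "a few PDFs and a couple of books", very roughly

flops = training_flops(N_PARAMS, N_TOKENS)
print(f"total compute: {flops:.1e} FLOPs")
print(f"GPU-hours:     {gpu_hours(flops, GPU_THROUGHPUT):,.0f}")
print(f"your documents as a share of the corpus: {YOUR_DOCS_TOKENS / N_TOKENS:.1e}")
```

Under these assumptions that’s on the order of 150,000 GPU-hours, and a million tokens of your own documents would make up about 0.00005% of the training corpus.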

Fine-tuning follows the pre-training phase of the LLM development cycle, although computationally it’s quite similar. The differences are the specificity of the data and the time involved. It's akin to honing a broadly educated mind to specialize in a specific field. After an LLM has been pre-trained on a vast, general dataset to understand language, fine-tuning adjusts the model to excel at specific tasks or comprehend particular domains.

Think of fine-tuning as customizing an all-purpose tool to perform specific jobs with greater efficiency and accuracy.

This phase is crucial for tailoring a model to specific needs, whether it's understanding medical terminology, generating marketing content, engaging in casual conversation, or in our case, speaking like a bitcoiner!

Fine-tuning shifts the focus from a general understanding of language to specialized knowledge or capabilities. It involves further training the model, this time on a dataset that's closely aligned with the intended application.
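To see why a low-rank method like LoRA is so much cheaper than full fine-tuning, here’s a minimal sketch. LoRA freezes an existing weight matrix W and learns a low-rank update B·A, so the effective weight becomes W + (α/r)·B·A. The layer size, rank and tiny matrices below are hypothetical, chosen only to illustrate the arithmetic, and plain Python lists stand in for a real tensor library.

```python
def full_finetune_params(d_out: int, d_in: int) -> int:
    """Full fine-tuning updates every entry of a d_out x d_in weight matrix."""
    return d_out * d_in

def lora_params(d_out: int, d_in: int, rank: int) -> int:
    """LoRA trains B (d_out x rank) and A (rank x d_in); W itself stays frozen."""
    return rank * (d_out + d_in)

def matmul(x: list[list[float]], y: list[list[float]]) -> list[list[float]]:
    """Plain-Python matrix product, to keep the sketch dependency-free."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*y)] for row in x]

def lora_merge(W, B, A, alpha: float, rank: int) -> list[list[float]]:
    """Effective weight after LoRA: W + (alpha / rank) * (B @ A)."""
    delta = matmul(B, A)
    scale = alpha / rank
    return [[w + scale * d for w, d in zip(w_row, d_row)]
            for w_row, d_row in zip(W, delta)]

# Hypothetical square projection layer of a 7B-class model.
d = 4096
print(full_finetune_params(d, d))   # 16777216
print(lora_params(d, d, rank=8))    # 65536

# Tiny rank-1 example. In practice B starts at zero, so training begins
# exactly at the pre-trained weights; nonzero values here just show the merge.
W = [[1.0, 0.0], [0.0, 1.0]]
B = [[0.5], [1.0]]      # 2 x 1
A = [[2.0, 4.0]]        # 1 x 2
print(lora_merge(W, B, A, alpha=1.0, rank=1))   # [[2.0, 2.0], [2.0, 5.0]]
```

At rank 8, the trainable weights for this one layer drop from about 16.8 million to about 65 thousand, roughly 0.4%, while the frozen base weights stay untouched.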

There are three “general” categories of fine-tuning. Once again, this doesn’t hold 100% of the time; it’s just a useful way to understand it.

The choice between full fine-tuning, LoRA, and partial fine-tuning depends on several factors:

The final stage in LLM development focuses on aligning the model with specific human standards and preferences. This 'last mile' stage is essential for refining the model’s decision-making capabilities and ensuring its outputs align with desired outcomes, particularly in terms of relevance, style and accuracy.

Imagine this as the final tuning of a high-performance engine, ensuring it not only runs smoothly but also responds precisely as intended.

There are two main options for reinforcement learning, and a blend of both can be used. Let’s look at each.

RLHF (reinforcement learning from human feedback) uses human feedback to build a reward model, which is then used to further refine the LLM. The steps are:

RLAIF (reinforcement learning from AI feedback) is very similar to RLHF, except that we use existing LLMs to provide the feedback. The result is ultimately of lower quality, but it is also cheaper in both time and money.
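Both RLHF and RLAIF usually start by turning pairwise preferences (“response A is better than response B”, from a human or an LLM judge) into a reward model. A common formulation is the Bradley-Terry pairwise loss, −log σ(r(chosen) − r(rejected)). The sketch below fits a tiny linear reward model on hand-made feature vectors; the features and preference pairs are hypothetical, and real reward models are themselves large neural networks. The trained reward model is then used to optimize the LLM, commonly with a policy-gradient method such as PPO, which isn’t shown here.

```python
import math

def reward(weights: list[float], features: list[float]) -> float:
    """Linear reward model: score = w . x (a stand-in for a neural reward model)."""
    return sum(w * x for w, x in zip(weights, features))

def pairwise_loss(weights, chosen, rejected) -> float:
    """Bradley-Terry loss: -log(sigmoid(margin)) = log(1 + exp(-margin))."""
    margin = reward(weights, chosen) - reward(weights, rejected)
    return math.log1p(math.exp(-margin))

def train_reward_model(pairs, n_features: int, lr: float = 0.1, epochs: int = 200) -> list[float]:
    """Stochastic gradient descent on the pairwise loss."""
    weights = [0.0] * n_features
    for _ in range(epochs):
        for chosen, rejected in pairs:
            margin = reward(weights, chosen) - reward(weights, rejected)
            grad_margin = -1.0 / (1.0 + math.exp(margin))  # d(loss)/d(margin)
            for i in range(n_features):
                weights[i] -= lr * grad_margin * (chosen[i] - rejected[i])
    return weights

# Hypothetical features per response: [on_topic, speaks_like_a_bitcoiner, rambles]
pairs = [
    ([1.0, 1.0, 0.0], [1.0, 0.0, 0.0]),  # rater preferred the bitcoiner-style answer
    ([1.0, 0.0, 0.0], [1.0, 0.0, 1.0]),  # rater preferred the concise answer
    ([1.0, 1.0, 0.0], [0.0, 1.0, 0.0]),  # rater preferred the on-topic answer
]
weights = train_reward_model(pairs, n_features=3)
print([round(w, 2) for w in weights])
print(all(reward(weights, c) > reward(weights, r) for c, r in pairs))
```

After training, every preferred response scores higher than its rejected counterpart, which is exactly the signal the later RL step optimizes against.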

Reinforcement Learning, whether RLHF, RLAIF or some blend, plays a vital role in the final stages of LLM development. It is the last mile alignment stage, and really puts the icing on the cake, so to speak.

Now that we have the training stages out of the way, let’s look at what most people erroneously call “training” today, and understand why it is fundamentally different from training a model, but still useful in particular contexts.

Retrieval Augmented Generation (RAG) is the most popular way to augment or enhance a model, so we’ll put our focus here. RAG is a novel way to get a model to produce responses that are more accurate or “relevant” to a domain or point of view. Contrary to popular belief, RAG has nothing to do with actually “training a model”. Instead, it focuses on augmenting the capabilities of an existing model. This augmentation is achieved not through retraining with new data, but by enhancing its responses through a sophisticated use of embeddings and external data retrieval.

Think of it as automated, dynamic prompting: semantic search finds the context relevant to a question and injects it into the prompt for you.

It’s a bit like asking a model to answer a question by referencing some specific context you pasted into the prompt. Imagine you just copied a relevant section from a book, pasted it into ChatGPT, then asked the model to answer a question by referencing that context. It’s actually pretty simple, conceptually speaking.

In fact, most people who use ChatGPT (or any other model) do this already, only somewhat manually. They make sophisticated prompts so that the model can reply more accurately. RAG just allows you to do it dynamically and programmatically. It abstracts away the manual process.

RAG enhances AI applications by allowing them to dynamically access and incorporate information from external databases. This method effectively broadens the AI’s knowledge base without altering its foundational training. The core AI model, already trained on a substantial dataset, is coupled with a retrieval system that fetches relevant information from a vast external database in response to specific queries or content requirements.
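Here’s a minimal sketch of that retrieve-then-prompt loop, assuming a toy bag-of-words “embedding” in place of a real embedding model and a Python list in place of a vector database. `embed`, `retrieve` and `build_prompt` are hypothetical names for illustration. Note that the model’s weights are never touched: only the prompt changes.

```python
import math
import re
from collections import Counter

def embed(text: str) -> Counter:
    """Toy 'embedding': a bag of lowercase words. A real system would call an
    embedding model here; this stand-in is an assumption for illustration."""
    return Counter(re.findall(r"[a-z0-9']+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query: str, docs: list[str], k: int = 2) -> list[tuple[float, str]]:
    """Return the k documents most similar to the query, best first."""
    q = embed(query)
    scored = [(cosine(q, embed(d)), d) for d in docs]
    return sorted(scored, key=lambda s: s[0], reverse=True)[:k]

def build_prompt(query: str, docs: list[str], k: int = 2) -> str:
    """Programmatic version of 'paste the relevant section, then ask'."""
    context = "\n".join(f"- {doc}" for _, doc in retrieve(query, docs, k))
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {query}"
    )

docs = [
    "Refunds are processed within 14 days of the request.",
    "Our office is open Monday to Friday.",
    "Support tickets are answered within 24 hours.",
]
print(build_prompt("How many days do refunds take?", docs, k=1))
```

The resulting string is what gets sent to the unchanged model, exactly as if you’d pasted the relevant paragraph into ChatGPT yourself.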

RAG is valuable in areas where AI responses need to be supplemented with up-to-date or specialized information. However, RAG’s effectiveness hinges on the quality of the external data sources and on the system's ability to accurately match query embeddings with relevant information. Setting up and maintaining such a system, especially with vast and continually updated data sources, is precisely where things get challenging.

Furthermore, the core model has not been changed, so it’s not producing anything novel or unique. It is still the same underlying model, and as soon as a question falls outside what’s in the vector store, it will fall back to its default behavior, or not answer at all.
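A hedged sketch of that failure mode: if nothing in the store is similar enough to the question, there’s no context to inject, and the app can only hand the bare question to the base model or decline. Word-overlap (Jaccard) similarity stands in for embedding similarity here, and the 0.1 threshold and the `route` policy are hypothetical.

```python
import re

def words(text: str) -> set[str]:
    """Lowercase word set for a piece of text."""
    return set(re.findall(r"[a-z0-9']+", text.lower()))

def jaccard(a: str, b: str) -> float:
    """Word-overlap similarity in [0, 1], standing in for embedding similarity."""
    wa, wb = words(a), words(b)
    union = wa | wb
    return len(wa & wb) / len(union) if union else 0.0

def route(query: str, store: list[str], threshold: float = 0.1) -> str:
    """Hypothetical policy: inject the best match, or signal a fallback."""
    best = max(store, key=lambda doc: jaccard(query, doc), default=None)
    if best is None or jaccard(query, best) < threshold:
        # Nothing relevant retrieved: the base model answers unaided, or the app declines.
        return "FALLBACK: no relevant context found"
    return f"CONTEXT: {best}"

store = [
    "Refunds are processed within 14 days.",
    "Support is open Monday to Friday.",
]
print(route("How long do refunds take to be processed?", store))
print(route("Who invented Bitcoin?", store))
```

The second question gets no help from the store at all, so whatever the app returns comes from the unmodified base model.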

If your goal is a little widget, this is a useful solution. If your goal is to reference an internal document more easily, or perhaps turn your company FAQs into something you can query conversationally, then great. But this is not a new model, and it will not perform as well as a fully trained model.

In summary, LLM training is a powerful but resource-intensive process aimed at creating AI models with a broad and deep understanding of human language. It's a complex endeavor that combines data science, machine learning, and linguistic expertise, resulting in models that can interpret and generate human-like text across various contexts and applications. The training process not only shapes the capabilities of the model but also sets the limitations within which it operates.

Fine-tuning allows for the customization of a general model to meet specific needs and perform specialized tasks. It can be done via a low-resource approach like LoRA or as a full update to the model's parameters. Reinforcement learning is the last mile of the process, and aligns the model.

Finally, RAG and other approaches to augmentation are not training, but enhancements or wrappers around models, which are great for very narrow applications, prototyping and demonstrations.

Understanding these different elements and their implications is key to leveraging the full potential of AI in a targeted and efficient manner.

If the convergence of AI and Bitcoin is a rabbit hole you want to explore further, you should probably read the NEXUS: The First annual Bitcoin <> AI Industry Report. It contains loads of interesting data and helps sort the real from the hype. You will also learn how we leverage Bitcoin to crowd-source the human feedback necessary to train our open-source language model.